
Dyna Robotics

Dyna Robotics Turbocharges Foundation Model Training with Alluxio

Dyna Robotics, a cutting-edge robotics company, improved its foundation model training performance by deploying Alluxio as a distributed caching and data access layer. Facing I/O bottlenecks caused by high-volume video ingestion and limited NFS throughput, DYNA adopted Alluxio to eliminate PyTorch DataLoader starvation and unlock compute-bound, fault-tolerant model training across its GPU cluster. This significantly accelerated model iteration, a core business driver for DYNA, and directly enabled faster commercial deployment and greater market agility.

About Dyna Robotics

Dyna Robotics is pioneering the next generation of embodied artificial intelligence, dedicated to making AI-powered robotic automation accessible to businesses of all sizes. The company’s mission is to empower businesses by automating repetitive tasks with intelligent robotic arms. With their breakthrough robotics foundation model, Dynamism v1 (DYNA-1), they enable robots to perform complex, dexterous tasks with commercial-grade precision and reliability.

(Source: Dynamism v1 (DYNA-1) Model: A Breakthrough in Performance and Production-Ready Embodied AI)

Dyna Robotics' initial product is already commercialized, with live customer deployments. The company continues to improve its foundation model by building efficient infrastructure to collect data from both human demonstrations and real-world deployments.

The Challenge

Dyna Robotics’ training pipeline is data-intensive, relying heavily on video demonstration clips captured during human teleoperation and robot deployments. Like many robotics companies, DYNA generates tens of terabytes of new video data daily, which is first ingested into Google Cloud Storage (GCS).

To make this data accessible to training jobs, a cron-based process synchronized files hourly from GCS to an on-prem NFS server. This architecture introduced significant limitations:

- Severe I/O Starvation: The NFS backend was unable to sustain the high-throughput, low-latency demands of PyTorch DataLoader when processing massive volumes of small video and image files. Prefetch queues frequently ran empty, starving the training pipeline and leaving GPUs underutilized.

- Wasted GPU Cycles: With training jobs blocked on I/O, workloads became I/O-bound rather than compute-bound. This led to inefficient GPU utilization and inflated cloud costs, undermining the economics of scaled model development.

- Redundant and Inefficient Data Path: The hourly GCS-to-NFS synchronization introduced a duplicated data path and created a chokepoint. The NFS layer itself added unnecessary operational overhead, consuming resources while offering limited performance benefits under DYNA’s small-file-heavy workload. Meanwhile, fast local SSDs sat idle on the GPU servers.
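One way to check whether a training loop is I/O-bound in the sense described above is to time how long it waits for each batch versus how long it spends on the training step. The following is a minimal sketch, not DYNA's tooling: `measure_stall_fraction` and its parameters are hypothetical names, and it accepts any iterable of batches (a PyTorch DataLoader would be one such iterable).

```python
import time
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def measure_stall_fraction(batches: Iterable[T], train_step: Callable[[T], None]) -> float:
    """Return the fraction of measured time spent waiting for the next batch.

    Hypothetical helper for illustration. A value near 1.0 suggests the loop
    is starved for data; a value near 0.0 suggests it is compute-bound.
    """
    wait_seconds = 0.0
    compute_seconds = 0.0
    iterator = iter(batches)
    while True:
        start = time.perf_counter()
        try:
            batch = next(iterator)  # time blocked on the data pipeline
        except StopIteration:
            break
        fetched = time.perf_counter()
        train_step(batch)  # time spent in the training step
        done = time.perf_counter()
        wait_seconds += fetched - start
        compute_seconds += done - fetched
    total = wait_seconds + compute_seconds
    return wait_seconds / total if total > 0 else 0.0
```

When the step runs on a GPU, kernels are launched asynchronously, so `train_step` would need to synchronize with the device before returning for this split to be meaningful.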

These infrastructure limitations triggered a cascading impact on business velocity. Training bottlenecks delayed model iteration, which slowed product refinement, impeded downstream application development, and ultimately pushed out commercial rollout timelines and revenue realization. In a competitive robotics landscape, this latency in iteration risked eroding DYNA’s first-mover advantage.

The Solution: Alluxio as a Distributed Caching Layer

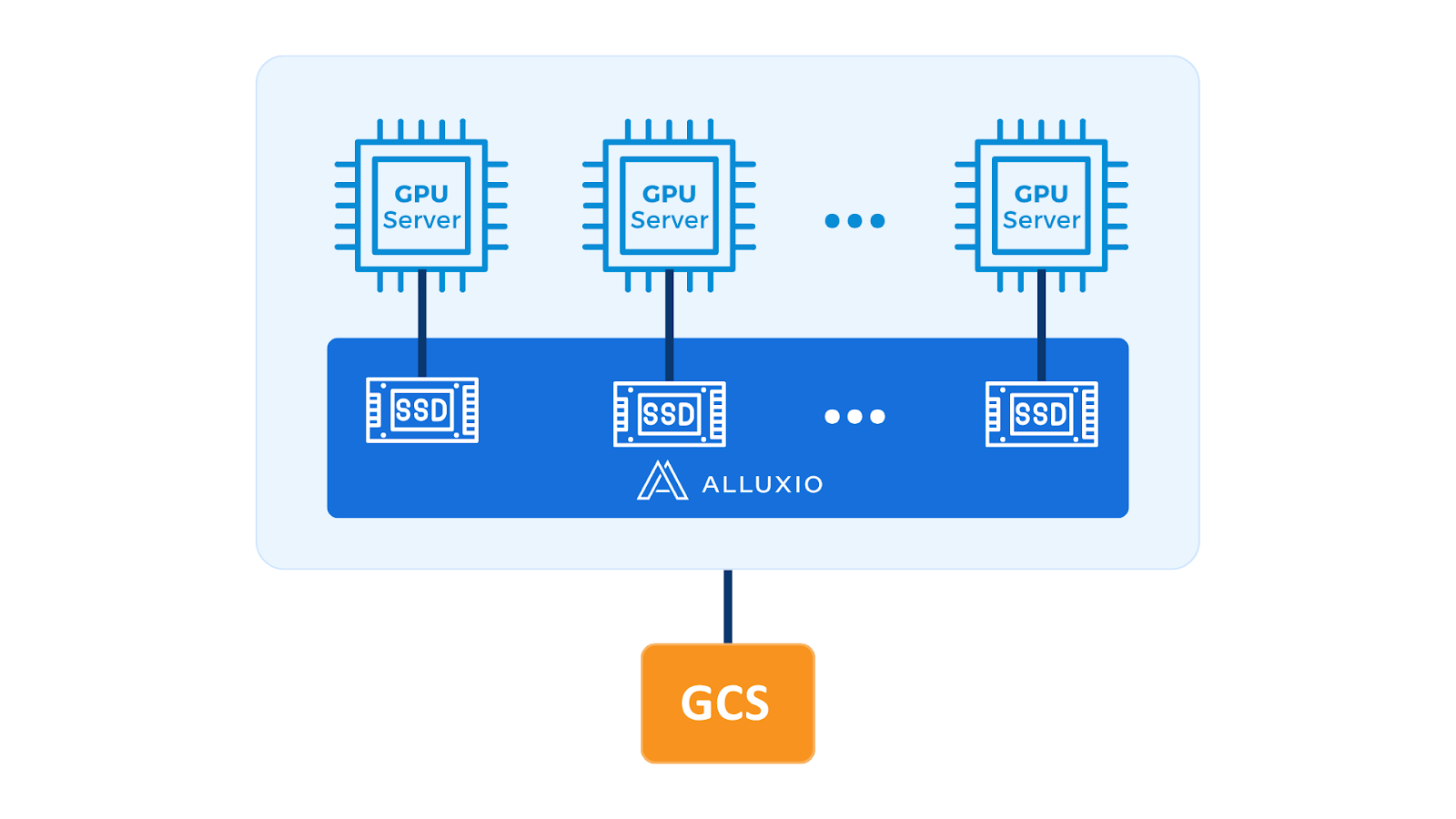

To address critical I/O bottlenecks and accelerate model iteration, Dyna Robotics adopted Alluxio as a distributed caching and unified data access layer across its GPU training infrastructure.

High-Throughput Cluster-Wide Caching: DYNA deployed Alluxio on its GPU servers to form a shared, distributed cache using existing but underutilized local SSDs. This approach avoided additional hardware spend while significantly boosting I/O throughput, resolving DataLoader starvation, and keeping training pipelines compute-bound.

Optimized for Small File Access: Alluxio’s architecture is well-suited for workloads with massive numbers of small files and high metadata pressure, such as DYNA’s training datasets composed of short video clips. This delivered much higher and more stable read performance than traditional NFS.

Seamless PyTorch Integration: With a POSIX-compliant interface, Alluxio dropped in as a replacement for NFS without requiring code changes. DYNA’s team saw immediate performance gains without modifying their PyTorch training pipelines.
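To illustrate why a POSIX interface allows a swap without code changes: data-loading code that only uses ordinary file operations under a configurable root directory does not care what backs that directory. The sketch below is an assumption-laden example, not DYNA's pipeline; the environment variable `TRAIN_DATA_ROOT`, the default path, and the `.mp4` suffix are all hypothetical.

```python
import os
from pathlib import Path

# Hypothetical names for illustration only; not taken from DYNA's setup.
DATA_ROOT_ENV = "TRAIN_DATA_ROOT"
DEFAULT_ROOT = "/mnt/nfs/clips"


def list_clips(root=None, suffix=".mp4"):
    """Return sorted paths of clip files under the data root.

    Only standard file-system calls are used, so the root can be an NFS
    mount, a local directory, or any other POSIX-style mount point.
    """
    base = Path(root or os.environ.get(DATA_ROOT_ENV, DEFAULT_ROOT))
    return sorted(p for p in base.rglob(f"*{suffix}") if p.is_file())


def read_clip(path):
    """Read one clip's raw bytes with a plain open() call."""
    with open(path, "rb") as f:
        return f.read()
```

Under these assumptions, pointing the job at a different mount is a configuration change (a new value for the root path) and the loading code itself stays the same.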

Operational Robustness and Fallback Handling: Because Alluxio was co-located with GPU servers, node failures could take cache workers offline. However, Alluxio’s graceful fallback to object storage (GCS) ensured uninterrupted training, continuing at baseline throughput even during hardware faults.
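The fallback behavior described here follows a general read-through pattern: try the cache first and, if the cache is unavailable, read from the backing store. The sketch below shows only that general pattern. It is not Alluxio's implementation or API (Alluxio handles this transparently beneath the file-system interface), and `read_with_fallback`, `read_cache`, and `read_origin` are hypothetical names.

```python
from typing import Callable


def read_with_fallback(
    key: str,
    read_cache: Callable[[str], bytes],
    read_origin: Callable[[str], bytes],
) -> bytes:
    """Read `key` from the cache, falling back to the origin store on failure.

    Conceptual illustration only. OSError covers connection, timeout, and
    missing-file errors in Python's built-in exception hierarchy.
    """
    try:
        return read_cache(key)
    except OSError:
        # Cache worker unreachable or entry missing: serve from the origin
        # at its baseline speed instead of failing the read.
        return read_origin(key)
```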

Key Metrics and Values

Technical Values

- Training Acceleration: Foundation model training accelerated by up to 35% during periods of high utilization.

- Operational Simplification: Transparent caching of hot data eliminates the operational complexity of managing multiple NFS servers and their data volume size limits.

- Fault Tolerance: Demonstrated resilience when a GPU machine failed unexpectedly, with training continuing without slowdown or data loss.

Business Impact

- Improved Product Readiness: More frequent model updates enabled faster quality improvements.

- Commercial Rollout Acceleration: Enhanced development velocity supported faster scaling toward commercial deployment targets.

Summary

By implementing Alluxio, Dyna Robotics has accelerated model iteration cycles, which directly translates to a competitive advantage in the rapidly evolving robotics market. “In the highly competitive landscape of embodied AI, having a high-performance, scalable, and reliable AI infrastructure is absolutely critical,” explained Lindon Gao, CEO of Dyna Robotics. “Alluxio, as the data acceleration layer for our foundation model training infrastructure, has proven to be an extremely valuable partner in our journey to commercial success.”
